When the CIS matters less, what is mobile phone photography pursuing?

When it comes to imaging, or taking pictures with mobile phones, the market usually focuses on the camera module itself, or on its core component, the CMOS image sensor (CIS). Competition in the smartphone CIS market remains fierce, and more and more demand is shifting from 8-inch wafers to 12-inch wafers. At the same time, as demand grows for CIS with more than 40 million pixels, pixel process nodes are also shrinking.

This change is probably not good news for Sony, which holds the largest share of the mobile CIS market. In August of this year, a set of unverified data from one source circulated on Twitter, indicating that in the first and second quarters of this year the gap between Samsung's and Sony's image sensor market shares was the narrowest it has ever been: Sony's share dropped to 42.5% in the second quarter, while Samsung's rose to 21.7%. In the view of “International Electronic Business”, this is related to the process technology advantages that Samsung, and other players such as SK Hynix, hold in high-pixel-count sensors.

The value of the imaging market may be shifting. Because smartphones account for the largest share of the imaging field (Yole Developpement data from the middle of last year show that mobile CIS accounts for 70% of all CIS sales), this article mainly uses smartphones as its example. The transformation now under way is that a market originally dominated by the CIS is gradually shifting toward image/vision processors, such as AI cores and ISPs (image signal processors), and this shift will create greater market value.

Smartphone imaging is also distinctive in another way. In other fields, such as medical imaging or industrial machine vision, the image sensor is mainly there for “taking pictures”, and more attention goes to post-processing and computation on the image data. Mobile phone photography, by contrast, has always been chiefly about “shooting well”, and it has long placed its emphasis on the image sensor.

Smartphone makers still lead with high pixel counts and large CIS sizes when promoting their cameras. However, the main determinant of image quality has shifted from the CIS to the processing and computation of image data. This shift also reflects how advances in digital chips and the rapid progress of AI are challenging traditional optical technology.

Signs that started to appear two years ago

MediaTek proposed the concept of the “true AI camera” in 2018. The concept rests on three main elements: 1. a high-pixel-count, large-format CIS; 2. a multi-core ISP; 3. a high-performance AI core. The first is already a consensus in the imaging field, while the last two concern the post-processing of image data.

If the ISP processes the data, then AI cores and other vision processors perform deeper computation on it. The importance of the ISP has been stressed repeatedly in the past, yet its standing in imaging, and in mobile phone photography especially, has lagged far behind that of the CIS. Meanwhile, the AI core has been a hot commodity in imaging for the past two years. Against this backdrop, the “true AI camera” concept is essentially a marketing pitch to persuade device makers to adopt MediaTek's SoCs, but it does raise the ISP and the AI core to the same level as the CIS.

Whether it is an ISP dedicated to the camera or an AI processing unit, its use in photography falls under Computational Photography, which has become popular over the past two years. The public's understanding of “AI photography” is probably still limited to beauty filters, face recognition, background removal, or making the sky bluer and the grass greener. In fact, AI now assists in nearly every aspect of taking a picture, as discussed below.

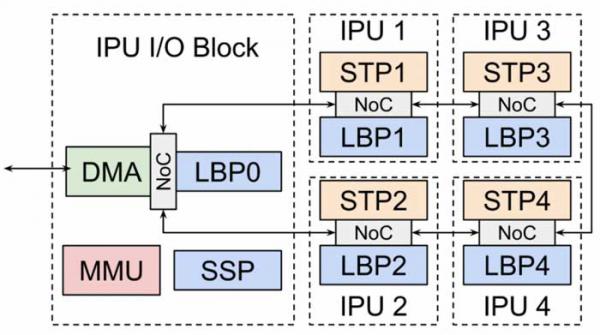

Besides chip makers such as MediaTek, Google's moves also deserve attention. According to “International Electronic Business”, in 2017 Google equipped its Pixel 2 phone with the Pixel Visual Core (Figure 1), a dedicated Arm-based image/vision processor in a SiP package designed by the company. It can be regarded as a fully programmable, multi-core, domain-specific architecture chip for imaging, vision and AI, and on the Pixel 4 it was succeeded by the Pixel Neural Core.

Of course, Google's Pixel phones have generally been more forward-looking and experimental in the mobile field. Google has accumulated many years of expertise in Computational Photography, and it believes that developing dedicated image processing hardware for photography is more efficient than relying on the ISP and AI Engine that Qualcomm integrates into its SoCs.

Figure 1. Inside the Pixel Visual Core of a Pixel phone

In the pre-smartphone era, external ISPs/DSPs were common, but with the general trend toward chip integration, today's image processing hardware rarely exists as a standalone chip outside the SoC. Google's approach further raises the status of image/vision processors: although external standalone image/vision chips may not become a trend, post-processing has become an increasingly important part of every aspect of photography.

Google's Pixel phones have an interesting tradition: the same CIS model is often used in two consecutive generations. For example, the main cameras of the Pixel 3 and Pixel 4 are both believed to use the Sony IMX363. Even so, camera performance still leaps forward from one generation to the next, which is often remarked upon. It also shows that Google places heavy emphasis on image processing, not just image sensing.

Consider this year's Qualcomm Snapdragon 865 as an imaging example: its ISP supports a processing speed of 2 gigapixels per second, 4K HDR and 8K video capture, and photos of up to 200 million pixels. Working with the fifth-generation AI Engine, the ISP can quickly recognize different backgrounds, people and objects in a scene. Qualcomm now makes imaging a focus of every generation of its Snapdragon flagships.
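To give a feel for what a 2-gigapixel-per-second ISP budget means, the sketch below does the raw pixel-rate arithmetic for a few capture modes. It assumes a single stream gets the whole budget and uses standard 8K/4K frame sizes plus a hypothetical 20,000 x 10,000 layout for a 200-megapixel still; real ISPs also spend capacity on multiple processing passes and concurrent cameras, so treat this only as an order-of-magnitude illustration.

```python
# Rough pixel-throughput arithmetic for an ISP with a 2 gigapixel-per-second budget.
# Assumption: one stream uses the whole budget; multi-pass processing, concurrent
# cameras and blanking intervals are ignored.

ISP_BUDGET_PX_PER_S = 2_000_000_000  # 2 gigapixels per second, as quoted for the Snapdragon 865


def pixel_rate(width: int, height: int, fps: float) -> float:
    """Pixels per second that one video stream asks the ISP to process."""
    return width * height * fps


def max_fps(width: int, height: int, budget: float = ISP_BUDGET_PX_PER_S) -> float:
    """Upper bound on frame rate if the entire budget went to this resolution."""
    return budget / (width * height)


if __name__ == "__main__":
    print(f"8K at 30 fps: {pixel_rate(7680, 4320, 30) / 1e9:.2f} Gpx/s")
    print(f"4K at 60 fps: {pixel_rate(3840, 2160, 60) / 1e9:.2f} Gpx/s")
    # Hypothetical 200-megapixel layout (20,000 x 10,000) for the still-photo case.
    print(f"200 MP stills: at most {max_fps(20_000, 10_000):.0f} frames per second")
```

By this arithmetic, 8K video at 30 fps already uses about half of the quoted budget, and a single 200-megapixel frame needs at least 0.1 s of ISP time.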

Apple's A14, released this year, brings only modest CPU and GPU gains, but its Neural Engine (the AI core) has grown to 16 cores, raising its computing power to 11 TOPS; the A14's CPU also contains upgraded machine learning AMX blocks (matrix multiplication accelerators). Mobile AI processors are often criticized for having few real applications, but they are quietly playing a role in Computational Photography.

The increasingly clear market situation

In May this year, Sony launched two “intelligent vision sensors”, the IMX500 and IMX501, which the company claims are the world's first image sensors with built-in AI processing. The sensor portion of each chip is a typical back-illuminated CIS; the integrated edge AI portion consists of a logic chip containing a DSP and the memory needed to hold the AI model, making it a typical edge AI system. Strictly speaking, the IMX500/501 should not be described merely as “sensors”.

When paired with cloud services, the two chips can output only metadata (the results of on-chip analysis) instead of full images, which reduces data transmission latency, power consumption and communication costs. The essence of this design is to move some “post-processing” capability into the image sensor itself, which enables more accurate, real-time object tracking while recording video. Currently, the two sensors are mainly used in retail and industrial equipment.
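The bandwidth argument for metadata-only output can be made concrete with a quick size comparison. The sketch below is hypothetical throughout: the 12-megapixel frame, 10-bit RAW depth, `Detection` record and JSON encoding are illustrative assumptions, not Sony's actual output format.

```python
# Compare the bytes a sensor sends when it outputs a full RAW frame versus
# only the metadata from on-chip analysis. All sizes and labels are hypothetical.
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple


@dataclass
class Detection:
    label: str                      # hypothetical class name from an on-sensor model
    score: float                    # confidence in [0, 1]
    box: Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def raw_frame_bytes(width: int, height: int, bits_per_pixel: int = 10) -> int:
    """Size of one uncompressed RAW frame with one sample per pixel."""
    return width * height * bits_per_pixel // 8


def metadata_bytes(detections: List[Detection]) -> int:
    """Size of the detections serialized as compact JSON."""
    payload = json.dumps([asdict(d) for d in detections], separators=(",", ":"))
    return len(payload.encode("utf-8"))


if __name__ == "__main__":
    frame = raw_frame_bytes(4000, 3000)  # a hypothetical 12-megapixel frame
    meta = metadata_bytes([Detection("person", 0.92, (812, 340, 220, 610))])
    print(f"RAW frame: {frame:,} bytes; metadata: {meta} bytes; ratio ~{frame // meta:,}x")
```

Even with generous metadata, the payload is several orders of magnitude smaller than the frame, which is where the latency, power and cost savings come from.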

Sony has also launched a supporting software subscription service for its AI-enabled CIS. The potential market value of AI-based data analysis is greater than that of the sensor market itself. Although Sony does not expect the service to be profitable in the short term, it is very bullish on its long-term growth. Even though the IMX500/501 is not aimed at smartphones, the move reflects a change in Sony's thinking about its CIS business: it is beginning to expand from pure image sensing into image/vision processing. After all, growth in the traditional CIS market is slowing.

In the middle of this year, Yole Developpement released a report titled “2019 Image Signal Processor and Vision Processor Market and Technology Trends”. The report clearly states: “AI has revolutionized the hardware in vision systems, with implications for the entire industry.”

“Image analysis adds a lot of value. Image sensor vendors are becoming interested in integrating a software layer into the system. Today's image sensors have to go beyond simply capturing an image to analyzing it.”

“But running such software requires high computing power and storage, which has led to the emergence of vision processors. The ISP market will grow at a steady compound annual growth rate (CAGR) of 3% from 2018 to 2024, reaching $4.2 billion in 2024. Meanwhile, the vision processor market will see explosive growth, with a CAGR of 18% from 2018 to 2024, reaching $14.5 billion by 2024.”

Figure 2. Expected image/vision processor shipments and market size, 2018-2024 (Source: Yole Developpement)

Of course, these values are still below the annual value of the CIS market: even in 2024, the two markets combined will only slightly exceed the size of the CIS market this year (CIS industry output is expected to reach US$17.2 billion this year). Note, however, that growth in the CIS market is slowing, and that the software market for vision processing chips is not included here; Sony, at least, believes that this software market's long-term potential is greater than that of the CIS market itself. Yole Developpement's forecast data show that ISPs will gradually lose market share, while demand is clearly growing for more compute-focused vision processors (Figure 2).
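The Yole figures quoted above can be sanity-checked with basic compound-growth arithmetic. The sketch below backs out the 2018 starting values implied by each 2024 figure and CAGR (these implied values are derived here, not reported by Yole) and compares the combined 2024 total with this year's expected CIS output.

```python
# Back-of-the-envelope check of the Yole forecast quoted above (US$ billions).
ISP_2024, ISP_CAGR = 4.2, 0.03
VISION_2024, VISION_CAGR = 14.5, 0.18
CIS_THIS_YEAR = 17.2
YEARS = 6  # 2018 -> 2024


def implied_start(final_value: float, cagr: float, years: int) -> float:
    """Starting value implied by a final value and a compound annual growth rate."""
    return final_value / (1 + cagr) ** years


def project(start: float, cagr: float, years: int) -> float:
    """Grow a starting value at a constant CAGR for the given number of years."""
    return start * (1 + cagr) ** years


isp_2018 = implied_start(ISP_2024, ISP_CAGR, YEARS)
vision_2018 = implied_start(VISION_2024, VISION_CAGR, YEARS)
combined_2024 = ISP_2024 + VISION_2024

if __name__ == "__main__":
    print(f"Implied 2018 ISP market:    ${isp_2018:.2f}B")
    print(f"Implied 2018 vision market: ${vision_2018:.2f}B")
    print(f"Combined 2024: ${combined_2024:.1f}B vs CIS this year: ${CIS_THIS_YEAR}B")
```

The implied 2018 values come out at roughly $3.5 billion and $5.4 billion, and the combined 2024 total of about $18.7 billion is less than 10% above this year's CIS figure.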

“It's worth noting that many traditional industry players are more constrained when dealing with the AI trend. This opens the door for other players to enter the business, such as Apple, Huawei, startups like Mobileye, and even companies from other fields, like Nvidia.” This shows how deep the imaging market has become.

What exactly does AI bring to mobile phone photography?

In March of this year, DxOMark, the well-known French imaging lab, noted in an article that over the past 10 years the image quality of smartphone photos has improved by more than 4 EV, of which 1.3 EV comes from improvements in image sensors/optics and 3 EV from improvements in image/vision processors (post-processing of image data). This largely overturns the common belief that improving photo quality means improving CIS technology.
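Because EV is a logarithmic unit, the DxOMark split is easier to appreciate as linear factors. The sketch below assumes 1 EV means a doubling (the standard meaning of one photographic stop); the article does not say exactly how DxOMark maps its measurements onto EV, so this is only an illustration.

```python
# Convert the DxOMark EV figures into linear factors, treating 1 EV as a doubling.

SENSOR_OPTICS_EV = 1.3   # gain attributed to image sensors and optics
PROCESSING_EV = 3.0      # gain attributed to image/vision processing


def ev_to_factor(ev: float) -> float:
    """n EV corresponds to a linear factor of 2 ** n."""
    return 2.0 ** ev


total_ev = SENSOR_OPTICS_EV + PROCESSING_EV
processing_share = PROCESSING_EV / total_ev  # share of the EV gain, on the log scale

if __name__ == "__main__":
    print(f"Sensor/optics: x{ev_to_factor(SENSOR_OPTICS_EV):.2f}")
    print(f"Processing:    x{ev_to_factor(PROCESSING_EV):.2f}")
    print(f"Combined:      x{ev_to_factor(total_ev):.1f} "
          f"({processing_share:.0%} of the EV from processing)")
```

Under this reading, sensors and optics contribute roughly a 2.5x gain, processing about 8x, and the two multiply to roughly 20x.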

Image/vision processing itself is an old and long-developed field: AWB (automatic white balance), ANR (noise reduction), 3DNR (3D noise reduction), BLC (black level correction), HDR and so on have long been standard ISP functions. Over the past two years, the AI photography functions mentioned most often in image post-processing have been face recognition, object recognition, semantic segmentation, smart beauty filters and the like.
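For readers unfamiliar with these routine ISP steps, the sketch below shows two of them in their simplest textbook form: black level correction and automatic white balance. The gray-world AWB rule is one classic method chosen here for illustration (it is not what any particular vendor ships), the pixel values and black/white levels are hypothetical, and real ISPs operate on Bayer RAW data rather than RGB triplets.

```python
# Two classic ISP steps on linear RGB triplets: black level correction (BLC) and
# gray-world automatic white balance (AWB).
from typing import List, Tuple

Pixel = Tuple[float, float, float]


def black_level_correct(pixels: List[Pixel], black: float, white: float) -> List[Pixel]:
    """Subtract the sensor's black level, normalize to [0, 1], and clip negatives."""
    scale = white - black
    return [tuple(max(0.0, (c - black) / scale) for c in p) for p in pixels]


def gray_world_awb(pixels: List[Pixel]) -> List[Pixel]:
    """Scale R and B so their means match the G mean (assumes the scene averages to gray)."""
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    if mean_r == 0 or mean_b == 0:
        return list(pixels)  # no usable color statistics; leave the image unchanged
    gain_r, gain_b = mean_g / mean_r, mean_g / mean_b
    return [(min(1.0, r * gain_r), g, min(1.0, b * gain_b)) for r, g, b in pixels]


if __name__ == "__main__":
    # Hypothetical readings with a black level of 64 and a white level of 1088.
    raw = [(576, 320, 192), (320, 192, 128)]
    linear = black_level_correct(raw, black=64, white=1088)
    print(gray_world_awb(linear))
```

Gray-world works when the scene really does average to gray; it fails on scenes dominated by one color and in very dim light, which is exactly where learned approaches come in.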

These are indeed part of the value AI brings to imaging, but AI's contribution to photo quality in mobile phones now extends into the routine ISP tasks listed above. Much of Google's Computational Photography research also targets these components, for example automatic white balance in low-light scenes, where traditional algorithms struggle to correct white balance. Google applied machine learning to this problem a few years ago: by feeding a model a large number of photos with well-corrected white balance, it trained a learned automatic white balance model.
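The idea of learning white balance from correctly balanced photos can be shown with a deliberately tiny model. Google's actual model and features are not described in the article; the log-chroma features, straight-line regression, `ToyLearnedAWB` class and synthetic `render` scenes below are all hypothetical, meant only to show how training data lets a model correct casts that a fixed rule gets wrong.

```python
# A toy "learned" auto white balance: fit a mapping from simple image statistics
# to the scene illuminant, using photos whose correct white balance is known.
import math
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def log_chroma(pixels: List[Pixel]) -> Tuple[float, float]:
    """Mean log(G/R) and log(G/B) over the image (pixels must be positive)."""
    n = len(pixels)
    u = sum(math.log(g / r) for r, g, _ in pixels) / n
    v = sum(math.log(g / b) for _, g, b in pixels) / n
    return u, v


class ToyLearnedAWB:
    def fit(self, images: List[List[Pixel]], illuminants: List[Pixel]) -> "ToyLearnedAWB":
        """Learn to predict each illuminant's log-chroma from its image's log-chroma."""
        feats = [log_chroma(img) for img in images]
        u_true = [math.log(lg / lr) for lr, lg, _ in illuminants]
        v_true = [math.log(lg / lb) for _, lg, lb in illuminants]
        self.u_model = fit_line([f[0] for f in feats], u_true)
        self.v_model = fit_line([f[1] for f in feats], v_true)
        return self

    def apply(self, pixels: List[Pixel]) -> List[Pixel]:
        """Predict R and B gains for a new image and apply them."""
        u, v = log_chroma(pixels)
        (su, iu), (sv, iv) = self.u_model, self.v_model
        gain_r, gain_b = math.exp(su * u + iu), math.exp(sv * v + iv)
        return [(r * gain_r, g, b * gain_b) for r, g, b in pixels]


def render(illuminant: Pixel, shades=(0.2, 0.4, 0.6)) -> List[Pixel]:
    """Hypothetical scene: surfaces reflecting half as much red as green and blue."""
    lr, lg, lb = illuminant
    return [(0.5 * lr * s, lg * s, lb * s) for s in shades]


if __name__ == "__main__":
    train_lights = [(1.0, 1.0, 0.5), (0.5, 1.0, 1.0), (0.8, 1.0, 0.7)]
    awb = ToyLearnedAWB().fit([render(light) for light in train_lights], train_lights)
    print(awb.apply(render((0.6, 1.0, 0.9))))  # red stays at half of green; blue matches green
```

A pure gray-world correction would force red to match green in these scenes; the fitted intercept instead learns that they are genuinely low in red, so only the illuminant's cast is removed.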

Google applies machine learning to many aspects and features of Pixel imaging, such as real-time HDR when taking photos and stabilization when shooting video. For video stabilization, post-processing runs in three stages: first, motion analysis, which acquires gyroscope signals and combines them with optical image stabilization (OIS) data; second, motion filtering, which combines machine learning and signal processing to predict the camera's own motion trajectory; and finally, frame synthesis, which compensates for distortion caused by the rolling shutter and small hand movements.
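The three stages can be illustrated with a one-dimensional (single rotation axis) sketch. The function names are hypothetical, stage 2 uses a centered moving average as a stand-in for Google's combination of machine learning and signal processing, and OIS data and rolling-shutter correction are omitted.

```python
# A 1-D sketch of the three video-stabilization stages described above.
from typing import List, Sequence


def integrate_gyro(rates: Sequence[float], dt: float) -> List[float]:
    """Stage 1 (motion analysis): integrate angular rate (rad/s) into a per-frame angle."""
    angle, path = 0.0, []
    for w in rates:
        angle += w * dt
        path.append(angle)
    return path


def smooth_path(path: Sequence[float], radius: int = 2) -> List[float]:
    """Stage 2 (motion filtering): estimate the intended camera path with a centered average."""
    n, out = len(path), []
    for i in range(n):
        r = min(radius, i, n - 1 - i)  # shrink the window near the ends so it stays centered
        window = path[i - r:i + r + 1]
        out.append(sum(window) / len(window))
    return out


def frame_corrections(path: Sequence[float], smoothed: Sequence[float]) -> List[float]:
    """Stage 3 (frame synthesis): rotation to apply to each frame to follow the smoothed path."""
    return [s - a for a, s in zip(path, smoothed)]


if __name__ == "__main__":
    # Hypothetical gyro trace: a slow pan plus hand jitter, one sample per frame at 30 fps.
    rates = [0.3 + (0.8 if i % 2 else -0.8) for i in range(12)]
    path = integrate_gyro(rates, dt=1 / 30)
    print([round(c, 4) for c in frame_corrections(path, smooth_path(path))])
```

A centered window is used so that a steady pan passes through with no correction while frame-to-frame jitter is removed; this relies on seeing a few future frames, which a video pipeline can afford by buffering.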

Figure 3. Source: Google AI Blog

A more typical example is simulated bokeh (background blur). Traditional solutions rely mainly on stereo vision, whereas Google's solution not only combines two stereo vision sources (the dual cameras and dual-pixel technology of the Pixel 4) but also, to make the blur more reliable, performs semantic segmentation on objects in the picture. Google built a five-camera rig, shot a large number of scenes to collect enough training data, and used TensorFlow to train a convolutional neural network: the dual-pixel and dual-camera inputs are first processed separately, each by an encoder that encodes it into an intermediate representation (IR), and the two representations then pass through another encoder that computes the final object depth (Figure 3). Each encoder here is itself a kind of neural network.
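Once a depth map exists, rendering the blur is comparatively simple. The sketch below works on a single image row and takes the depth as given (in Google's pipeline it would come from the network described above). The linear blur-size rule, the box filter and the parameter values are simplifying assumptions; real renderers use disk-shaped kernels and handle occlusion at subject edges.

```python
# Depth-driven synthetic bokeh on a single image row.
from typing import List, Sequence


def blur_radius(depth: float, focus_depth: float, strength: float, max_radius: int) -> int:
    """Blur radius in pixels, growing with distance from the focal plane."""
    return min(max_radius, int(round(strength * abs(depth - focus_depth))))


def synthetic_bokeh(row: Sequence[float], depth: Sequence[float], focus_depth: float,
                    strength: float = 2.0, max_radius: int = 3) -> List[float]:
    """Replace each pixel by the mean of a window whose size depends on its depth."""
    n, out = len(row), []
    for i in range(n):
        r = blur_radius(depth[i], focus_depth, strength, max_radius)
        lo, hi = max(0, i - r), min(n, i + r + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out


if __name__ == "__main__":
    row = [0, 0, 10, 0, 0, 10, 0, 0]   # two bright points
    depth = [1.0] * 4 + [3.0] * 4      # subject at 1 m, background at 3 m (hypothetical)
    print(synthetic_bokeh(row, depth, focus_depth=1.0))
```

The in-focus half of the row is left untouched while the background point is spread out, which is why an accurate depth map, and accurate segmentation at the subject's edges, matters so much for convincing bokeh.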

In April, MediaTek researchers published a paper titled “Learning Camera-Aware Noise Models”, proposing to model image sensor noise with “a data-driven approach that learns a noise model from real-world noise. The noise model is camera-dependent: different sensors have different noise characteristics, and these can all be learned.”
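What "camera-dependent" means can be illustrated with the simplest classic noise model rather than the paper's learned one: a signal-dependent (Poisson-Gaussian) model, variance = a * mean + b, whose parameters are fitted separately for each sensor from flat-field measurements. This swapped-in model, the function names and the two sensors' statistics below are all hypothetical.

```python
# Per-sensor parameters of a signal-dependent noise model, variance = a * mean + b,
# fitted by least squares from flat-field measurements.
from typing import Dict, Sequence, Tuple


def fit_noise_model(means: Sequence[float], variances: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of variance = a * mean + b (a: shot-noise gain, b: read noise)."""
    n = len(means)
    mx, my = sum(means) / n, sum(variances) / n
    sxx = sum((x - mx) ** 2 for x in means)
    sxy = sum((x - mx) * (y - my) for x, y in zip(means, variances))
    a = sxy / sxx
    return a, my - a * mx


def noise_std(signal: float, params: Tuple[float, float]) -> float:
    """Predicted noise standard deviation at a given normalized signal level."""
    a, b = params
    return max(0.0, a * signal + b) ** 0.5


if __name__ == "__main__":
    levels = [0.05, 0.1, 0.2, 0.4, 0.8]
    # Hypothetical flat-field statistics for two different sensors.
    measured: Dict[str, Sequence[float]] = {
        "sensor_A": [0.01 * m + 0.0004 for m in levels],
        "sensor_B": [0.004 * m + 0.0001 for m in levels],
    }
    for name, variances in measured.items():
        params = fit_noise_model(levels, variances)
        print(name, [round(v, 6) for v in params], f"std at 0.1: {noise_std(0.1, params):.4f}")
```

A learned model such as the one in the paper can capture noise structure this two-parameter form misses, but the principle is the same: each sensor gets its own model fitted from its own data, which a denoiser can then be tuned against.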

These examples show that more and more market players, at different levels of the industry, are investing in image post-processing. This helps explain why Google's Pixel phones, despite using older CIS models, still hold an advantage over phones with 100-million-pixel-class CIS in many head-to-head imaging comparisons. Google's external AI vision chip clearly gives it more room to maneuver.

Today's phones widely use AI to enhance image quality, including in traditional stages such as framing, noise suppression and automatic white balance. At the user level, however, the AI chip's participation in this computation is barely noticeable.

As these techniques become more common in imaging, the old CIS-centric view of mobile imaging is increasingly losing its relevance. Device makers have shifted the focus of mobile photography competition to image/vision processing and computation. After all, traditional optical technology cannot develop as fast as digital chips.

Many people now compare photos taken with phones against those from full-frame cameras. Even if such comparisons have little practical meaning, they show how far the image/vision processing power of phones has gone toward making up for the shortcomings of mobile CIS. In fact, this is also a contest between two approaches and two eras.